The AI Treadmill

I was recently poking around in some of my old code: a daily, AI-generated newsletter. It reads the day's news and then emails me the things I should care about as a PM.

Even though it wasn’t that long ago that I built this, it is crazy how things have changed. GPT-4 wasn’t out yet, and the OpenAI API didn't offer web search. LangChain was around but was still in its infancy. I also didn't understand how to use LLM chains or that they were called chains. Despite those limitations, I was able to build something that has run for the last eight months.

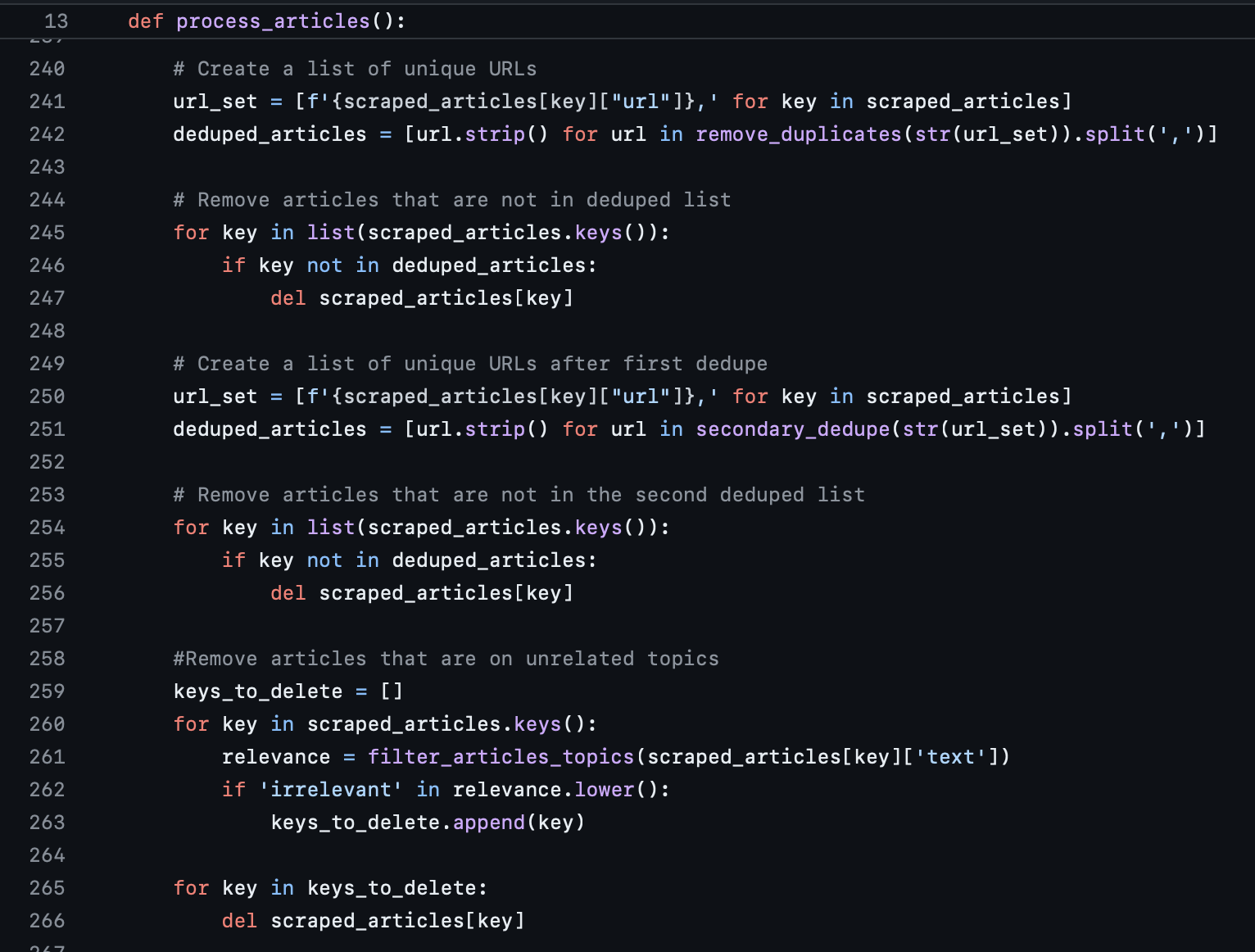

This code is centered on a chain (I built one without realizing it ⬆️). I built it in Python with many calls to GPT-3.5. It is packed with super-specific prompts about output formats, plus lots of guardrails and output cleanup functions. It was the best I could do at the time.
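For readers who have not built one by hand, here is a minimal sketch of what a chain like this might look like. It is not the original newsletter code. The names `clean_json_output`, `run_chain`, and `call_model` are hypothetical, and the model call is passed in as a plain function so the sketch does not depend on any particular API.

```python
import json
from typing import Callable


def clean_json_output(raw: str) -> dict:
    """Cleanup step: drop any chatter or code fences around the JSON object."""
    start = raw.find("{")
    end = raw.rfind("}")
    if start == -1 or end == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(raw[start : end + 1])


def run_chain(articles: list[str], call_model: Callable[[str], str]) -> str:
    """Two-step chain: summarize each article, then write the newsletter.

    `call_model` is a hypothetical stand-in for whatever LLM call you use.
    """
    summaries = []
    for article in articles:
        # Step 1: a very specific prompt that pins down the output format.
        prompt = (
            "Summarize the article below for a product manager. "
            'Respond ONLY with JSON like {"summary": "...", "relevant": true}.\n\n'
            + article
        )
        try:
            result = clean_json_output(call_model(prompt))
        except ValueError:
            # Guardrail: skip output that cannot be parsed
            # (json.JSONDecodeError is a subclass of ValueError).
            continue
        # Guardrail: keep only well-formed, relevant summaries.
        if result.get("relevant") is True and isinstance(result.get("summary"), str):
            summaries.append(result["summary"].strip())

    if not summaries:
        return "No relevant news today."

    # Step 2: the output of step 1 becomes the input of the next prompt.
    bullet_list = "\n".join(f"- {s}" for s in summaries)
    return call_model("Write a short newsletter from these points:\n" + bullet_list).strip()
```

Most of the code is parsing and validation rather than the model calls themselves, which is the part that newer tooling tends to absorb.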

But if I built this today, I could do the entire thing using LangChain and the Perplexity API. Hundreds of lines of code could be condensed and cleaned up, and the end result would likely be better. This is where I get stuck. The only way to find out whether it is actually better is to rebuild it. But is it worth rebuilding if it is already getting the job done? How big of a leap in quality is needed to justify the time spent refactoring and testing?

Soon, OpenAI might be able to do all this for me with a few prompts. Perplexity recently launched a daily podcast covering the news. How long until it can create one that is specific to me? LangChain is only on v0.2; I can’t imagine what will be in v1.0. We should be looking forward to all these things and building with all of them. The question is, how much time do we spend upgrading the old stuff versus building new things?

This is what it feels like to be on the AI treadmill. Things are moving so fast, and it is a struggle to keep up. As our codebase expands, we need to ask ourselves how much time we spend going back and updating things with these new capabilities. And if we spend the time now, how much time does that buy us before we need to do it again?

This problem is a reflection of our time. We are living in an incredible age of rapid progress. New, powerful capabilities are being released daily. Without a specific strategy and benchmarks, we could get stuck in place, trying to pack our existing AI features with the latest capabilities and never getting the time needed to build our next big thing. Eventually, things will stabilize. Today, you can use most standard code libraries without worrying about drastic changes or things breaking. The same cannot be said for AI, where every release is a step change. Going from LangChain v0.1 to v0.2 has unlocked so much (and broken so much). Until we get to that place of stability, the question is, do you run in place or keep pushing ahead? I don't know if there is a right answer, as long as you are aware of the risk.

Some people love running on the treadmill, and others love getting outside and exploring new trails. Neither is right or wrong; it all depends on what your goals are. This age of AI offers us a similar choice. We can stay on the treadmill and continually refine a small set of AI features, or we can push ahead and build bigger things with the latest capabilities.